Figure 2: Examples of a convolutional neural network that provides real-time predictions during a laparoscopic cholecystectomy for the GO zone (safe area to dissect) and the NO-GO zone (dangerous area to dissect, with a high likelihood of major bile duct injury). Figures courtesy of Dr. Amin Madani.

Figure 3: E-learning platform, Think Like a Surgeon, where trainees are asked to make annotations on surgical videos to answer specific questions (for example, “Where would you dissect next?”; “Where do you expect to find a particular anatomical plane/structure?”). Green annotation denotes the trainee’s mental model of the course of the left recurrent laryngeal nerve during a thyroidectomy. Expert responses are shown as a heat map for immediate real-time feedback. Figure courtesy of Dr. Amin Madani.

Similarly, given the widespread availability of advanced digital cameras, image-guided surgery, and high-definition video feeds, video-based assessment has gained momentum as a method for assessing surgical performance.11–13 New data suggest that computer vision can be used to identify specific phases of an operation or to assess specific events during a surgical video (for example, achievement of a Critical View of Safety) with moderate accuracy.14,15 Ongoing multicenter studies are validating the performance of AI systems to assess surgical performance, and it is reasonable to expect that this will become a fundamental component of credentialing and licensing in the future.

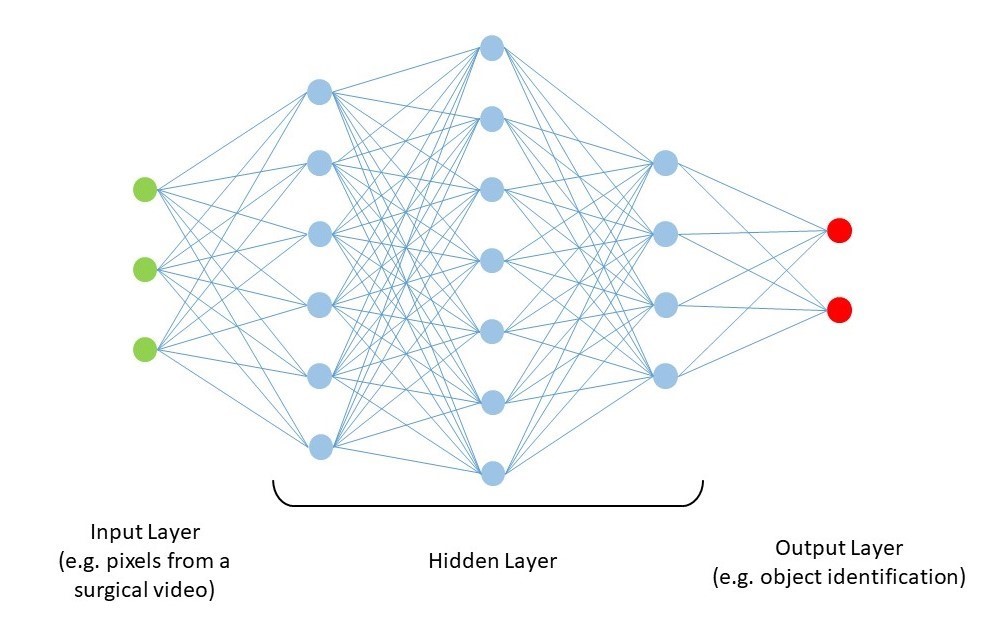

Despite the incredible potential of this technology to benefit surgical education, few, if any, studies have specifically evaluated the use of AI for video-based review of technical performance. The lack of literature on automated assessment is not surprising. Modern AI techniques, such as deep learning, have the potential to identify features in video that could be predictive of performance; however, such results depend on having a clear definition of the target performance to be achieved. Some work has been published on potential performance targets that could be automated. Hung et al. published work suggesting that automated measures, such as kinematic data from robotic systems, can correlate with outcomes16 and can differentiate expert and “super expert” surgeons performing robotic prostatectomy.17 Combined with work from Birkmeyer et al. and Curtis et al. suggesting a correlation between surgical skill and patient outcomes, it is reasonable to hypothesize that analysis of objective measures of performance could be automated.11,13 However, as discussed below, valid metrics will need to be identified through large-scale research collaborations that can generate the representative data needed to generalize results.
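As a hedged illustration of what an automated kinematic measure might look like, the sketch below computes two simple quantities, path length and mean speed, from sampled instrument-tip positions. The choice of metrics, the function names, the units, and the sample data are all assumptions made for illustration; they are not taken from the studies cited above, and neither quantity is a validated metric.

```python
import math
from typing import List, Tuple

# Hypothetical representation: one (x, y, z) instrument-tip position per sample, in millimetres.
Point = Tuple[float, float, float]


def path_length(positions: List[Point]) -> float:
    """Total distance travelled by the instrument tip across consecutive samples."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


def mean_speed(positions: List[Point], sample_rate_hz: float) -> float:
    """Average tip speed (mm/s), assuming samples are evenly spaced in time."""
    if len(positions) < 2:
        raise ValueError("at least two samples are required")
    if sample_rate_hz <= 0:
        raise ValueError("sample_rate_hz must be positive")
    duration_s = (len(positions) - 1) / sample_rate_hz
    return path_length(positions) / duration_s


# Illustrative data only: three samples recorded at an assumed 2 Hz.
samples = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
total_mm = path_length(samples)
speed_mm_s = mean_speed(samples, sample_rate_hz=2.0)
```

Whether measures of this kind reflect surgical skill is exactly the validity question raised above; the code shows only that such quantities are straightforward to compute once the raw data are available.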

Challenges for Using AI for Surgical Education

Despite the promise of AI for digitizing and improving surgical training, there are many pitfalls. Any automated surgical coach needs to undergo robust studies to demonstrate validity evidence, and the higher the stakes of the assessment (for example, licensing and credentialing), the more these data need to be valid, reliable, and legally defensible. Moreover, if a neural network is designed to reproduce a specific human behavior or aspect of an operation, there needs to be evidence that this specific item is representative of the underlying construct of surgical expertise. As Russell and Norvig famously stated:

“If a conclusion is made that an AI program is one that thinks like a human, it becomes imperative to understand exactly how humans think. Human behaviour can therefore be used as a ‘map’ to guide the performance of an algorithm.”18

Qualitative and mixed-methods studies are often useful for establishing which competencies are the highest yield when designing an AI model.19–21

There are also many nuances in surgery that make computer vision more challenging than in other fields. For instance, detecting anatomical structures and planes of dissection (semantic segmentation) is not simple because most surgical anatomy is covered by fatty and fibrous tissue, and object detection for hidden structures without clear boundaries is difficult. Furthermore, training a network on a dataset labeled or annotated by experts requires a gold standard, yet experts will often reach opposing assessments and conclusions. While it is logistically easier to achieve expert consensus for classification tasks (for example, whether or not there is a suspicious cancerous lesion), obtaining expert consensus on the boundaries of objects within the surgical field is more challenging. To overcome this, various tools, such as the Visual Concordance Test, have been used to achieve consensus from a panel of expert annotations on the surgical field, and this convergence of annotations is the gold standard that is subsequently used to train a network.22,23
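The idea of converging several expert annotations into a single training label can be sketched in a few lines. The example below is a minimal illustration under stated assumptions, not the procedure used by the Visual Concordance Test: each expert's annotation is assumed to be a binary pixel mask of the same size, the heat map is the fraction of experts who marked each pixel, and the consensus label keeps pixels marked by at least a chosen fraction of experts. The function names and the 0.5 threshold are hypothetical.

```python
from typing import List

# Hypothetical representation: 1 = pixel marked by the expert, 0 = not marked.
Mask = List[List[int]]


def agreement_map(masks: List[Mask]) -> List[List[float]]:
    """Per-pixel fraction of experts who marked each pixel (a simple heat map)."""
    if not masks:
        raise ValueError("at least one expert mask is required")
    rows, cols = len(masks[0]), len(masks[0][0])
    for mask in masks:
        if len(mask) != rows or any(len(row) != cols for row in mask):
            raise ValueError("all masks must have the same shape")
    n = len(masks)
    return [[sum(m[r][c] for m in masks) / n for c in range(cols)]
            for r in range(rows)]


def consensus_mask(masks: List[Mask], min_agreement: float = 0.5) -> Mask:
    """Binary label keeping pixels marked by at least `min_agreement` of experts."""
    heat = agreement_map(masks)
    return [[1 if value >= min_agreement else 0 for value in row] for row in heat]


# Illustrative data only: three experts annotating a tiny 2 x 3 image.
expert_a = [[1, 1, 0], [0, 1, 0]]
expert_b = [[1, 0, 0], [0, 1, 1]]
expert_c = [[1, 1, 0], [0, 0, 0]]

heat_map = agreement_map([expert_a, expert_b, expert_c])
gold_standard = consensus_mask([expert_a, expert_b, expert_c])
```

In this toy case the pixel marked by only one expert is dropped from the consensus label, while pixels marked by two or three experts are kept. The threshold is a design choice: a higher value yields a smaller, more conservative label, and a lower value a more inclusive one.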

Another challenge is obtaining adequate data to train a network to achieve high-level performance. The limited availability of surgical videos can make it difficult to train a network with enough examples for it to make accurate predictions in new cases. Finally, as in other areas where these applications have shown potential, a common criticism is the difficulty of understanding how an algorithm makes a decision, which can be problematic in the context of education, where a successful relationship between a teacher and a learner is based on trust, transparency, and feedback.24 However, growing interest in explainable AI (i.e., AI for which an algorithm can also present relevant examples justifying its decision) is leading to improved methods of “opening the black box” of deep learning.25

Ethical and Legal Considerations

As physicians, we do not have an outstanding record of properly introducing new technology or innovation into our field. We often take things at face value and draw conclusions from findings without rigorous assessment. Furthermore, we traditionally underestimate our own bias and undervalue the need to protect our intellectual property. As AI and deep learning permeate various facets of surgical care, it behooves us to reverse this trend.26

Surgeons need to understand that there is tremendous value and interest in raw, unedited operative videos. As we make decisions in this field, we need to recall two things. First, such video needs to be recorded, shared, and used in an appropriate manner for research, education, and quality improvement.26 Second, there is a tremendous opportunity for all members of the surgical community to learn from such multimedia, both on a personal and on a communal basis. As these data become increasingly available and are applied for many purposes to improve surgical care, it is also critical to be cognizant that there is substantial industry demand and that access needs to be regulated with a high degree of scrutiny. Procedures need to be followed to maintain data security and integrity, which often complicates the management of the data itself. Surgeons need to be actively involved in the production, use, and maintenance of algorithms that are derived from these large data repositories.

In addition, actions taken on the basis of machine learning and AI algorithms need to be considered wisely. When applied correctly, these algorithms have tremendous potential for formative feedback, technical assessment, minimizing work, and learning from big data. Nevertheless, if used for high-stakes decision making, such as credentialing or patient care, AI needs to be introduced in a programmatic and evidence-based fashion similar to the introduction of other innovative technology.27 For example, although deep computer vision can detect radiological findings, such as suspicious breast lesions, with a high degree of accuracy, this does not imply that such algorithms can simply replace radiologists.28 Variations in technique, patient factors, healthcare systems, disease states, and severity of illness dictate the need to train algorithms on large datasets, eliminate potential algorithmic biases, and validate them using best practices.

Disclosures

Drs. Amin Madani, Adnan Alseidi, and Maria Altieri have no proprietary or commercial interest in any product mentioned or concept discussed in this article. Dr. Daniel Hashimoto is a consultant for Johnson & Johnson Institute, Verily Life Sciences, Worrell, and Mosaic Research Management. He has received grant funding from Olympus. He has a pending patent on deep learning technology related to automated analysis of operative video.